papers

2024

-

VideoSAVi: Self-Aligned Video Language Models without Human Supervision
Yogesh Kulkarni, and Pooyan Fazli
arXiv preprint arXiv:2412.00624, 2024

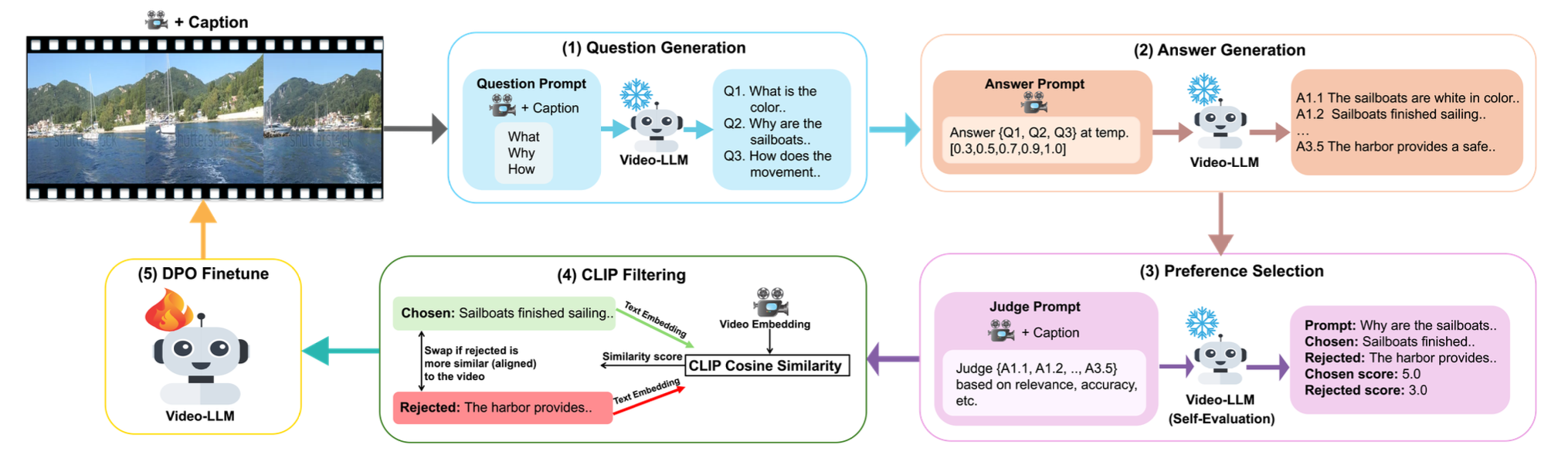

Recent advances in vision-language models (VLMs) have significantly enhanced video understanding tasks. Instruction tuning (i.e., fine-tuning models on datasets of instructions paired with desired outputs) has been key to improving model performance. However, creating diverse instruction-tuning datasets is challenging due to high annotation costs and the complexity of capturing temporal information in videos. Existing approaches often rely on large language models to generate instruction-output pairs, which can limit diversity and lead to responses that lack grounding in the video content. To address this, we propose VideoSAVi (Self-Aligned Video Language Model), a novel self-training pipeline that enables VLMs to generate their own training data without extensive manual annotation. The process involves three stages: (1) generating diverse video-specific questions, (2) producing multiple candidate answers, and (3) evaluating these responses for alignment with the video content. This self-generated data is then used for direct preference optimization (DPO), allowing the model to refine its own high-quality outputs and improve alignment with video content. Our experiments demonstrate that even smaller models (0.5B and 7B parameters) can effectively use this self-training approach, outperforming previous methods and achieving results comparable to those of models trained on proprietary preference data. VideoSAVi shows significant improvements across multiple benchmarks: up to 28% on multiple-choice QA, 8% on zero-shot open-ended QA, and 12% on temporal reasoning benchmarks. These results highlight the effectiveness of our self-training approach in enhancing video understanding while reducing dependence on proprietary models.
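
The preference-optimization step can be illustrated with the standard DPO objective. This is a minimal, stdlib-only sketch and not the authors' training code: it assumes each candidate answer has already been scored (stage 3) and reduced to a summed log-probability under the trainable policy and under a frozen reference model. The pairing rule in the hypothetical `select_pair` (highest-scored candidate as "chosen", lowest as "rejected") and the `beta=0.1` default are illustrative assumptions, not details taken from the paper.

```python
import math
from typing import Sequence, Tuple


def select_pair(candidates: Sequence[str], scores: Sequence[float]) -> Tuple[str, str]:
    """Hypothetical pairing rule: highest-scored answer is chosen, lowest is rejected."""
    if len(candidates) < 2 or len(candidates) != len(scores):
        raise ValueError("need at least two scored candidates")
    best = max(range(len(scores)), key=lambda i: scores[i])
    worst = min(range(len(scores)), key=lambda i: scores[i])
    return candidates[best], candidates[worst]


def _softplus(z: float) -> float:
    """Numerically stable log(1 + exp(z))."""
    if z > 0:
        return z + math.log1p(math.exp(-z))
    return math.log1p(math.exp(z))


def dpo_loss(
    policy_chosen: Sequence[float],
    policy_rejected: Sequence[float],
    ref_chosen: Sequence[float],
    ref_rejected: Sequence[float],
    beta: float = 0.1,
) -> float:
    """Mean DPO loss; each input holds summed answer log-probabilities per pair."""
    n = len(policy_chosen)
    if n == 0 or not (n == len(policy_rejected) == len(ref_chosen) == len(ref_rejected)):
        raise ValueError("inputs must be non-empty and of equal length")
    total = 0.0
    for pc, pr, rc, rr in zip(policy_chosen, policy_rejected, ref_chosen, ref_rejected):
        # Implicit reward margin: how much more the policy, relative to the
        # frozen reference model, favours the chosen answer over the rejected one.
        margin = beta * ((pc - rc) - (pr - rr))
        # -log(sigmoid(margin)) rewritten as softplus(-margin) for stability.
        total += _softplus(-margin)
    return total / n
```

When the policy and reference assign identical log-probabilities, the margin is zero and the loss is log 2 (about 0.693); it drops below that only when the policy shifts probability toward the chosen answer, relative to the rejected one, more than the reference does.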

2021

-

EnsembleNTLDetect: An Intelligent Framework for Electricity Theft Detection in Smart Grid
Yogesh Kulkarni, Sayf Hussain Z, Krithi Ramamritham, and 1 more author
2021 International Conference on Data Mining Workshops (ICDMW), 2021

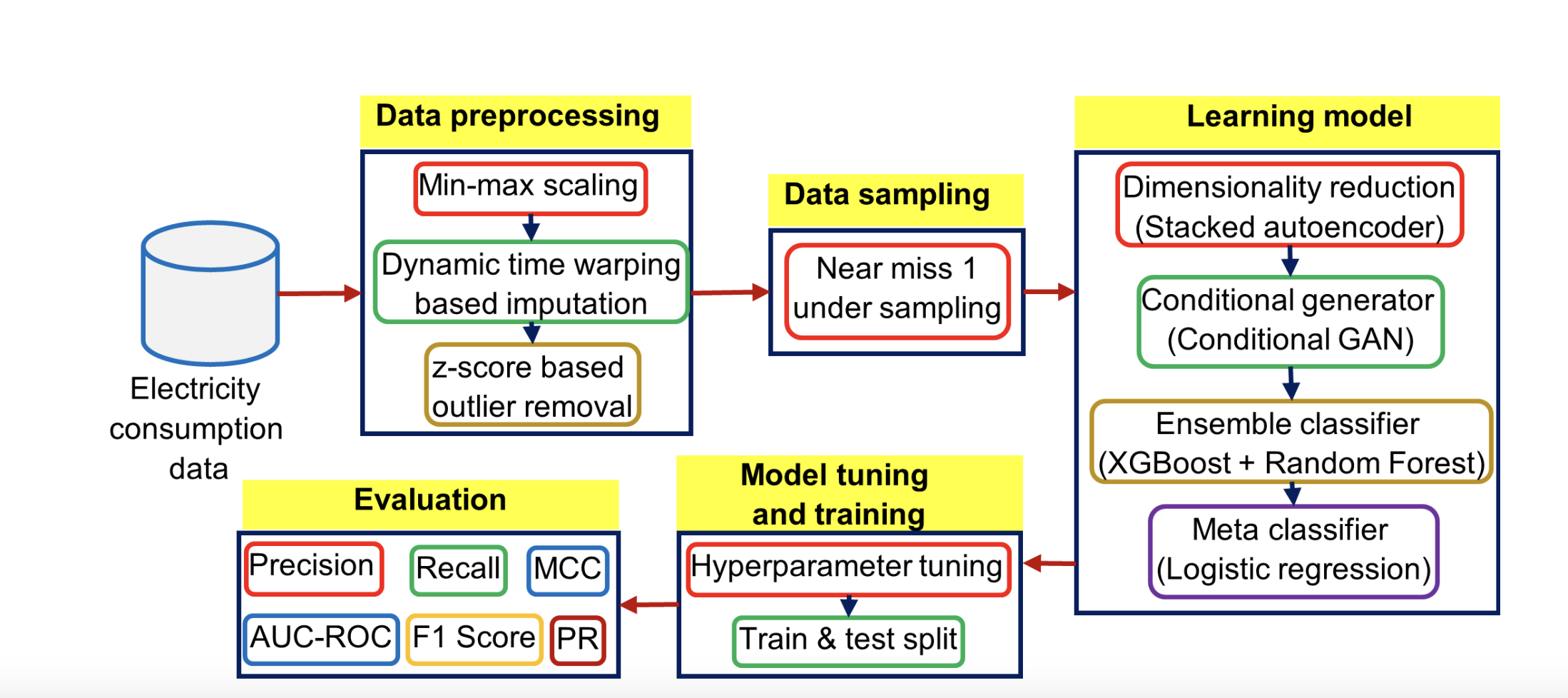

Artificial intelligence-based techniques applied to the electricity consumption data generated by the smart grid have proven to be an effective solution for reducing Non-Technical Losses (NTLs), thereby ensuring the safety, reliability, and security of smart energy systems. However, imbalanced data, consecutive missing values, long training times, and complex architectures hinder the real-time application of electricity theft detection models. In this paper, we present EnsembleNTLDetect, a robust and scalable electricity theft detection framework that employs a set of efficient data pre-processing techniques and machine learning models to accurately detect electricity theft by analysing consumers’ electricity consumption patterns. This framework utilises an enhanced Dynamic Time Warping Based Imputation (eDTWBI) algorithm to impute missing values in the time series data and leverages the Near-miss undersampling technique to generate balanced data. Further, a stacked autoencoder is introduced for dimensionality reduction and to improve training efficiency. A Conditional Tabular Generative Adversarial Network (CTGAN) is used to augment the dataset to ensure robust training, and a soft voting ensemble classifier is designed to detect consumers with aberrant consumption patterns. Finally, experiments were conducted on the real-time electricity consumption data provided by the State Grid Corporation of China (SGCC) to validate the reliability and efficiency of EnsembleNTLDetect over state-of-the-art electricity theft detection models in terms of various quality metrics.
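
Two of the steps named in this abstract, DTW-based gap filling and soft voting, can be sketched in a few lines. This is a simplified, hypothetical illustration and not the paper's eDTWBI or its classifier: `dtw_fill_gap` keeps only the core idea of DTW-based imputation (fill a gap with the values that follow the earlier window most similar to the window just before the gap), and `soft_vote` averages per-model theft probabilities, which is what soft voting means. The function names, the absolute-difference DTW cost, the backward-only search, and the 0.5 threshold are all assumptions.

```python
from typing import List, Optional, Sequence


def dtw_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Classic dynamic time warping cost with an absolute-difference local cost."""
    inf = float("inf")
    n, m = len(a), len(b)
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            # Best of: stretch a, stretch b, or advance both.
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def dtw_fill_gap(series: Sequence[Optional[float]], start: int, length: int) -> List[Optional[float]]:
    """Fill series[start:start + length] with the values that follow the earlier
    window most similar (under DTW) to the window just before the gap.

    Assumes every value before the gap is observed (not None).
    """
    if length <= 0 or start < 2 * length or start + length > len(series):
        raise ValueError("gap must fit in the series with 2 * length observed values before it")
    query = series[start - length:start]
    best_pos, best_cost = 0, float("inf")
    # Candidate windows whose `length` successors end no later than the gap start.
    for p in range(start - 2 * length + 1):
        c = dtw_distance(series[p:p + length], query)
        if c < best_cost:
            best_pos, best_cost = p, c
    filled = list(series)
    filled[start:start + length] = series[best_pos + length:best_pos + 2 * length]
    return filled


def soft_vote(model_probs: Sequence[Sequence[float]], threshold: float = 0.5) -> List[int]:
    """Average each consumer's P(theft) over all models and flag means >= threshold."""
    n_models = len(model_probs)
    return [int(sum(col) / n_models >= threshold) for col in zip(*model_probs)]
```

The original DTWBI method and the paper's enhanced variant include refinements not reproduced here; this sketch keeps only the window-matching step so the role of DTW in imputation is visible.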

-

Kryptonite: An Adversarial Attack Using Regional Focus
Yogesh Kulkarni, and Krisha Bhambani
Applied Cryptography and Network Security Workshops, 2021

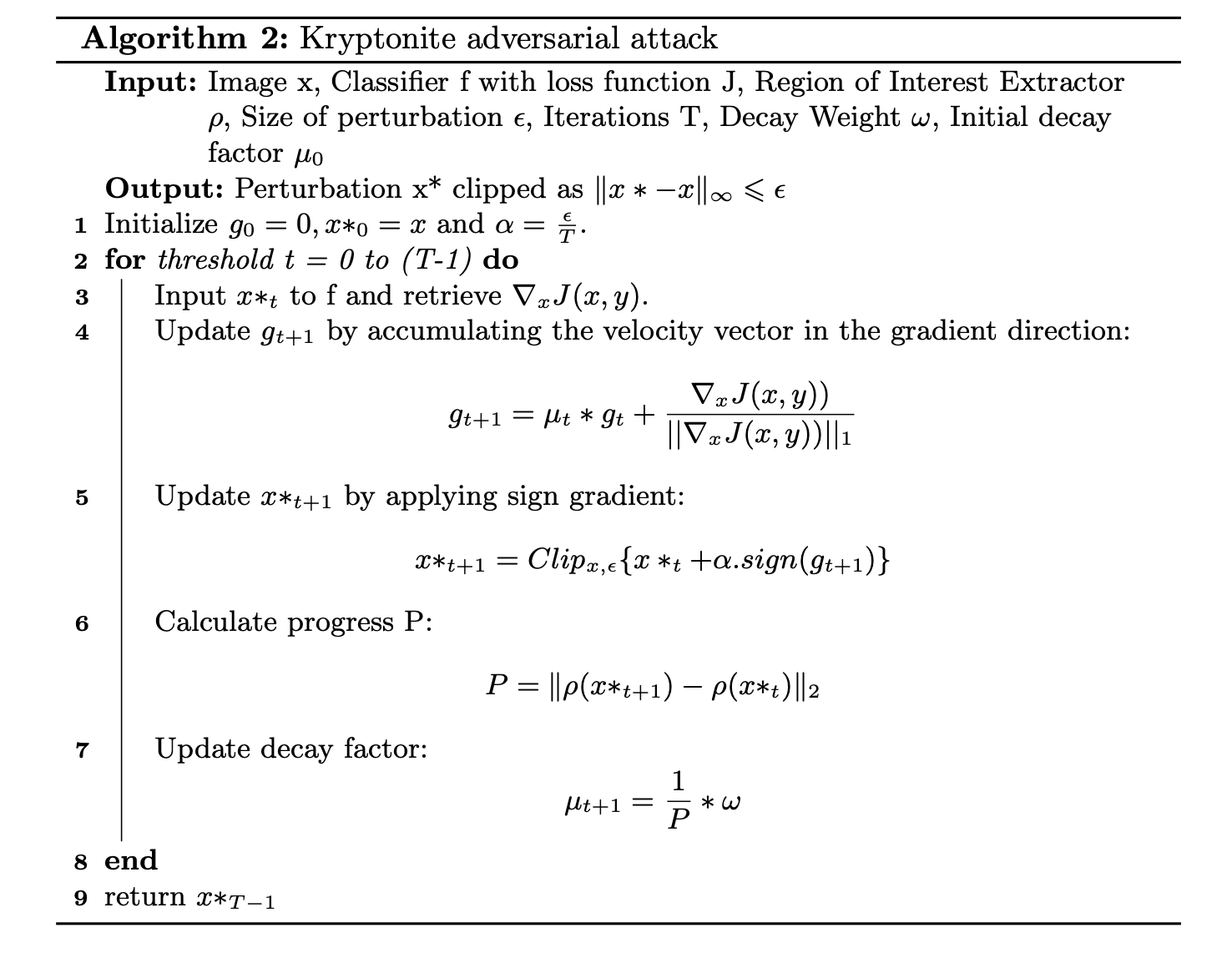

With the rise of adversarial machine learning and increasingly robust adversarial attacks, the security of applications that rely on machine learning has been called into question. Over the past few years, deep learning applications built on Deep Neural Networks (DNNs) have become extremely commonplace in fields including medical diagnosis, security systems, and virtual assistants, and have hence become more exposed and susceptible to attack. In this paper, we present a novel study analyzing weaknesses in the security of deep learning systems. We propose ‘Kryptonite’, an adversarial attack on images. We explicitly extract the Region of Interest (RoI) of each image and use it to add imperceptible adversarial perturbations that fool the DNN. We test our attack on several DNNs and compare our results with state-of-the-art adversarial attacks such as the Fast Gradient Sign Method (FGSM), DeepFool (DF), the Momentum Iterative Fast Gradient Sign Method (MIFGSM), and Projected Gradient Descent (PGD). Our attack causes the largest drop in network accuracy while adding minimal perturbation and requiring considerably less time per sample. We thoroughly evaluate our attack against three adversarial defence techniques, and the promising results showcase its efficacy.
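
The idea of confining an adversarial perturbation to a Region of Interest can be illustrated with a single FGSM step multiplied by a binary RoI mask. This is a hypothetical sketch, not Kryptonite itself: the abstract does not say how the RoI is extracted or how the perturbation is computed, so here the mask is simply given, the "network" is a toy logistic-regression model whose input gradient has a closed form, and the names `masked_fgsm` and `bce_loss` and the step size `eps` are illustrative assumptions.

```python
import math
from typing import List, Sequence


def _sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def _logit(x: Sequence[float], w: Sequence[float], b: float) -> float:
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def bce_loss(x: Sequence[float], w: Sequence[float], b: float, y: int) -> float:
    """Binary cross-entropy of a toy logistic-regression 'network' on input x."""
    p = _sigmoid(_logit(x, w, b))
    tiny = 1e-12  # guards against log(0)
    return -(y * math.log(p + tiny) + (1 - y) * math.log(1 - p + tiny))


def masked_fgsm(
    x: Sequence[float], mask: Sequence[int], w: Sequence[float], b: float, y: int, eps: float
) -> List[float]:
    """One FGSM step applied only where mask == 1 (the assumed region of interest)."""
    p = _sigmoid(_logit(x, w, b))
    adv = []
    for xi, wi, mi in zip(x, w, mask):
        # For logistic regression with BCE, dLoss/dx_i = (p - y) * w_i.
        g = (p - y) * wi
        step = eps * math.copysign(1.0, g) if (mi and g != 0) else 0.0
        # Keep pixel intensities in the valid [0, 1] range.
        adv.append(min(1.0, max(0.0, xi + step)))
    return adv
```

Pixels outside the mask are left untouched, so the change is bounded by `eps` per pixel and confined to the region; an iterative variant in the style of PGD would repeat the step and project back into the `eps`-ball around the original image.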

2020

-

Intensive Image Malware Analysis and Least Significant Bit Matching Steganalysis
Yogesh Kulkarni, and Anurag Gorkar
2020 IEEE International Conference on Big Data (Big Data), 2020

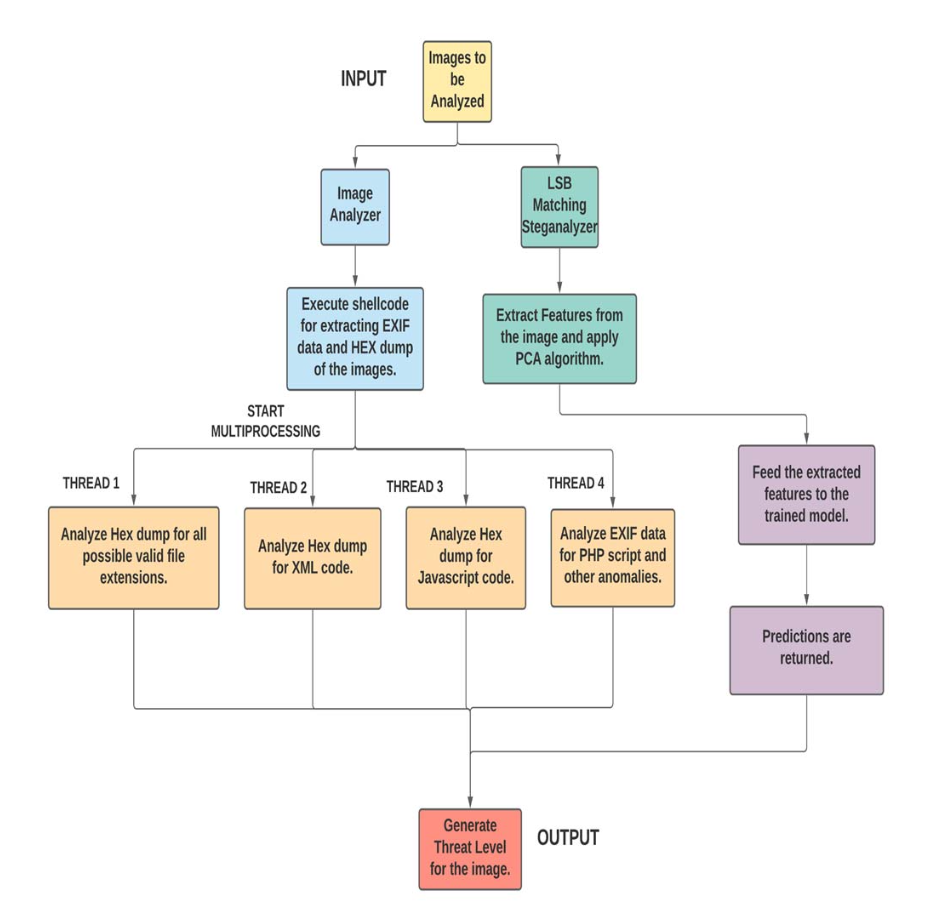

Malware, as defined by Kaspersky Labs, is "a type of computer program designed to infect a legitimate user’s computer and inflict harm on it in multiple ways." The exponential growth of the internet has led to a significant escalation in malware attacks, which affect a multitude of users and often have disastrous consequences. One of the most menacing methods of malware dissemination is through images. In this paper, we analyze the following methods of embedding malicious payloads in images: 1) Disguising PHP/ASM web shells inside the Exchangeable Image File Format (EXIF) data of an image. 2) An injection vulnerability that conceals Cross-Site Scripting (XSS) in the EXIF data to execute malicious payloads when the image is uploaded to a browser. 3) Feigning a malicious executable file in a zipped .sfx file format as an 4) Splitting the attack payload into a safe decoder and pixel-encoded code. 5) The Least Significant Bit (LSB) Matching steganography technique, used to embed pernicious payloads in image pixel data. After extensive analysis of these malware embedding techniques, we present ‘AnImAYoung’, an image malware analysis framework that thoroughly examines given images for the presence of any kind of anomalous content. Our framework uses ensemble machine learning methods, which increase its accuracy, to detect the minute statistical changes left in images when the LSB Matching steganography technique is used for payload embedding. The framework achieves excellent performance by applying sophisticated computing algorithms and can be easily integrated into the systems of organizations working with Big Data, providing them with a robust malware security option. This study describes the need for, and a practical approach to, tackling this novel method of malware dissemination.
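
The embedding side of LSB Matching (also called ±1 embedding) is short enough to show directly, and it explains why detecting it calls for statistical models rather than simple parity checks. This is a hypothetical illustration, not part of AnImAYoung: if a pixel's least significant bit already equals the message bit, the pixel is left alone; otherwise it is randomly incremented or decremented by one (clamped at 0 and 255). Plain LSB replacement only ever swaps values within pairs such as (2k, 2k + 1), which pairs-of-values tests can pick up; the random ±1 change avoids that signature. The function names and the flat list-of-8-bit-intensities image representation are assumptions.

```python
import random
from typing import List, Sequence


def lsb_match_embed(pixels: Sequence[int], bits: Sequence[int], rng: random.Random) -> List[int]:
    """Embed one bit per 8-bit pixel with LSB matching (+/-1 embedding)."""
    if len(bits) > len(pixels):
        raise ValueError("message longer than cover")
    stego = list(pixels)
    for i, bit in enumerate(bits):
        if (stego[i] & 1) == bit:
            continue  # LSB already carries the bit: no change needed
        if stego[i] == 0:
            stego[i] = 1  # cannot decrement below 0
        elif stego[i] == 255:
            stego[i] = 254  # cannot increment above 255
        else:
            # Either direction flips the LSB; choosing at random avoids the
            # even/odd asymmetry introduced by LSB replacement.
            stego[i] += rng.choice((-1, 1))
    return stego


def lsb_extract(pixels: Sequence[int], n_bits: int) -> List[int]:
    """Read the message back from the first n_bits pixels."""
    return [p & 1 for p in pixels[:n_bits]]
```

Each pixel moves by at most one intensity level, so the payload is visually invisible; a detector has to look for the small shifts this adds to the image's overall pixel statistics instead.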